1.What are the various types of Exceptions?

User defined and Predefined Exceptions.

2.Can we define exceptions twice in same block?

No.

3.What is the difference between a procedure and a function?

Functions return a single variable by value whereas procedures do not return

any variable by value. Rather they return multiple variables by passing

variables by reference through their OUT parameter.

4.Can you have two functions with the same name in a PL/SQL block?

Yes.

5.Can you have two stored functions with the same name?

Yes.

6.Can you call a stored function in the constraint of a table?

No.

7.What are the various types of parameter modes in a procedure?

IN, OUT AND INOUT.

8.What is Over Loading and what are its restrictions?

Over loading means an object performing different functions depending upon the

number of parameters or the data type of the parameters passed to it.

9.Can functions be over loaded?

Yes.

10.Can 2 functions have same name & input parameters but

differ only by return data type?

No.

11.What are the constructs of a procedure, function or a package?

The constructs of a procedure, function or a package are:

variables and constants, cursors, exceptions

12.Why create or Replace and not Drop and recreate procedures?

So that Grants are not dropped.

13.Can you pass parameters in packages? How?

Yes. You can pass parameters to procedures or functions in a package.

14.What are the parts of a

database trigger?

The parts of a trigger are:

A triggering event or statement

A trigger restriction

A trigger action

15.What are the various types of database triggers ?

There are 12 types of triggers, they are combination of :

Insert, Delete and Update Triggers.

Before and After Triggers.

Row and Statement Triggers.

(3*2*2=12)

16.What is the advantage of a stored procedure over a database

trigger ?

We have control over the firing of a stored procedure but we have no control

over the firing of a trigger.

17.What is the maximum no. of statements that can be specified in

a trigger statement ?

One.

18.Can views be specified in a trigger statement?

No

19.What are the values of :new and :old in Insert/Delete/Update

Triggers ?

INSERT : new = new value, old = NULL

DELETE : new = NULL, old = old value

UPDATE : new = new value, old = old value

20.What are cascading triggers? What is the maximum no of

cascading triggers at a time?

When a statement in a trigger body causes another trigger to be fired, the

triggers are said to be cascading. Max = 32.

21.What are mutating triggers ?

A trigger giving a SELECT on the table on which the trigger is written.

22.What are constraining triggers ?

A trigger giving an Insert/Updat e on a table having referential integrity

constraint on the triggering table.

23.Describe Oracle database's physical and logical structure ?

Physical : Data files, Redo Log files, Control file.

Logical : Tables, Views, Tablespaces, etc.

24.Can you increase the size of a tablespace ? How ?

Yes, by adding datafiles to it.

25.Can you increase the size of datafiles? How?

No (for Oracle 7.0)

Yes (for Oracle 7.3 onwards by using the Resize clause ----- Confirm !!).

26.What is the use of Control files ?

Contains pointers to locations of various data files, redo log files, etc.

27.What is the use of Data Dictionary ?

Used by Oracle to store information about various physical and logical Oracle

structures e.g. Tables, Tablespaces, datafiles, etc

28.What are the advantages of clusters ?

Access time reduced for joins.

29.What are the disadvantages of clusters ?

The time for Insert increases.

30.Can Long/Long RAW be clustered ?

No.

31.Can null keys be entered in cluster index, normal index ?

Yes.

32.Can Check constraint be used for self referential integrity ?

How ?

Yes. In the CHECK condition for a column of a table, we can reference some

other column of the same table and thus enforce self referential integrity.

33.What are the min. extents allocated to a rollback extent ?

Two

34.What are the states of a rollback segment ? What is the

difference between partly available and needs recovery ?

The various states of a rollback segment are :

ONLINE, OFFLINE, PARTLY AVAILABLE, NEEDS RECOVERY and INVALID.

35.What is the difference between unique key and primary key ?

Unique key can be null; Primary key cannot be null.

36.An insert statement followed by a create table statement

followed by rollback ? Will the rows be inserted ?

No.

37.Can you define multiple savepoints ?

Yes.

38.Can you Rollback to any savepoint ?

Yes.

39.What is the maximum no. of columns a table can have ?

254.

40.What is the significance of the & and && operators

in PL SQL ?

The & operator means that the PL SQL block requires user input for a

variable. The && operator means that the value of this variable should

be the same as inputted by the user previously for this same variable.

If a transaction is very large, and the rollback segment is not able to hold

the rollback information, then will the transaction span across different

rollback segments or will it terminate ?

It will terminate (Please check ).

41.Can you pass a parameter to a cursor ?

Explicit cursors can take parameters, as the example below shows. A cursor

parameter can appear in a query wherever a constant can appear.

CURSOR c1 (median IN NUMBER) IS

SELECT job, ename FROM emp WHERE sal > median;

42.What are the various types of RollBack Segments ?

Public Available to all instances

Private Available to specific instance

43.Can you use %RowCount as a parameter to a cursor ?

Yes

44.Is the query below allowed :

Select sal, ename Into x From emp Where ename = 'KING'

(Where x is a record of Number(4) and Char(15))

Yes

45.Is the assignment given below allowed :

ABC = PQR (Where ABC and PQR are records)

Yes

46.Is this for loop allowed :

For x in &Start..&End Loop

Yes

47.How many rows will the following SQL return :

Select * from emp Where rownum < 10;

9 rows

48.How many rows will the following SQL return :

Select * from emp Where rownum = 10;

No rows

49.Which symbol preceeds the path to the table in the remote

database ?

@

50.Are views automatically updated when base tables are updated ?

Yes

51.Can a trigger written for a view ?

No

52.If all the values from a cursor have been fetched and another

fetch is issued, the output will be : error, last record or first record ?

Last Record

53.A table has the following data : [5, Null, 10]. What will the

average function return ?

7.5

54.Is Sysdate a system variable or a system function?

System Function

55.Consider a sequence whose currval is 1 and gets incremented by

1 by using the nextval reference we get the next number 2. Suppose at this

point we issue an rollback and again issue a nextval. What will the output be ?

3

56.Definition of relational DataBase by Dr. Codd (IBM)?

A Relational Database is a database where all data visible to the user is

organized strictly as tables of data values and where all database operations

work on these tables.

57.What is Multi Threaded Server (MTA) ?

In a Single Threaded Architecture (or a dedicated server configuration) the

database manager creates a separate process for each database user. But in MTA

the database manager can assign multiple users (multiple user processes) to a

single dispatcher (server process), a controlling process that queues request

for work thus reducing the databases memory requirement and resources.

58.Which are initial RDBMS, Hierarchical & N/w database ?

RDBMS - R system

Hierarchical - IMS

N/W - DBTG

59.Difference between Oracle 6 and Oracle 7

ORACLE 7 ORACLE 6

Cost based optimizer · Rule based optimizer

Shared SQL Area · SQL area allocated for each user

Multi Threaded Server · Single Threaded Server

Hash Clusters · Only B-Tree indexing

Roll back Size Adjustment · No provision

Truncate command · No provision

Database Integrity

Constraints · Provision at Application Level

Stored procedures, functions

packages & triggers · No provision

Resource profile limit. It

prevents user from running

away with system resources · No provision

Distributed Database · Distributed Query

Table replication & snapshots· No provision

Client/Server Tech. · No provision

60.What is Functional Dependency

Given a relation R, attribute Y of R is functionally dependent on attribute X

of R if and only if each X-value has associated with it precisely one -Y value

in R

61.What is Auditing ?

The database has the ability to audit all actions that take place within it.

a) Login attempts, b) Object Accesss, c) Database Action

Result of Greatest(1,NULL) or Least(1,NULL)

NULL

62.While designing in client/server what are the 2 imp. things to

be considered ?

Network Overhead (traffic), Speed and Load of client server

63.What are the disadvantages of SQL ?

Disadvantages of SQL are :

· Cannot drop a field

· Cannot rename a field

· Cannot manage memory

· Procedural Language option not provided

· Index on view or index on index not provided

· View updation problem

64.When to create indexes ?

To be created when table is queried for less than 2% or 4% to 25% of the table

rows.

65.How can you avoid indexes ?

TO make index access path unavailable

· Use FULL hint to optimizer for full table scan

· Use INDEX or AND-EQUAL hint to optimizer to use one index or set to

indexes instead of another.

· Use an expression in the Where Clause of the SQL.

66.What is the result of the following SQL :

Select 1 from dual

UNION

Select 'A' from dual;

Error

67.Can database trigger written on synonym of a table and if it

can be then what would be the effect if original table is accessed.

Yes, database trigger would fire.

68.Can you alter synonym of view or view ?

No

69.Can you create index on view?.

No.

70.What is the difference between a view and a synonym?

Synonym is just a second name of table used for multiple link of database. View

can be created with many tables, and with virtual columns and with conditions.

But synonym can be on view.

71.What is the difference between alias and synonym?

Alias is temporary and used with one query. Synonym is permanent and not used

as alias.

72.What is the effect of synonym and table name used in same

Select statement?

Valid

73.What's the length of SQL integer?

32 bit length

74.What is the difference between foreign key and reference key?

Foreign key is the key i.e. attribute which refers to another table primary

key.

Reference key is the primary key of table referred by another table.

75.Can dual table be deleted, dropped or altered or updated or

inserted ?

Yes

76.If content of dual is updated to some value computation takes

place or not ?

Yes

77.If any other table same as dual is created would it act similar

to dual?

Yes

78.For which relational operators in where clause, index is not

used?

<>, like '% ...' is NOT functions, field +constant, field || ''

79.Assume that there are multiple databases running on one

machine. How can you switch from one to another?

Changing the ORACLE_SID

80.What are the advantages of Oracle?

Portability: Oracle is ported to more platforms than any of its

competitors, running on more than 100 hardware platforms and 20 networking

protocols.

Market Presence: Oracle is by far the largest RDBMS vendor and spends

more on R & D than most of its competitors earn in total revenue. This

market clout means that you are unlikely to be left in the lurch by Oracle and

there are always lots of third party interfaces available.

Backup and Recovery: Oracle provides industrial strength support for

on-line backup and recovery and good software fault tolerence to disk failure.

You can also do point-in-time recovery.

Performance: Speed of a 'tuned' Oracle Database and application is quite

good, even with large databases. Oracle can manage > 100GB databases.

Multiple database support: Oracle has a superior ability to manage

multiple databases within the same transaction using a two-phase commit

protocol.

81.What is a forward declaration? What is its use?

PL/SQL requires that you declare an identifier before using it. Therefore, you

must declare a subprogram before calling it. This declaration at the start of a

subprogram is called forward declaration. A forward declaration consists of a

subprogram specification terminated by a semicolon.

82.What are actual and formal parameters?

Actual Parameters: Subprograms pass information using parameters. The variables

or expressions referenced in the parameter list of a subprogram call are actual

parameters. For example, the following procedure call lists two actual

parameters named emp_num and amount:

Eg. raise_salary(emp_num, amount);

Formal Parameters : The variables declared in a subprogram

specification and referenced in the subprogram body are formal parameters. For

example, the following procedure declares two formal parameters named emp_id

and increase:

Eg. PROCEDURE raise_salary (emp_id INTEGER, increase REAL) IS current_salary

REAL;

83.What are the types of Notation?

Position, Named, Mixed and Restrictions.

84.What all important parameters of the init.ora are supposed to

be increased if you want to increase the SGA size?

In our case, db_block_buffers was changed from 60 to 1000 (std values are 60,

550 & 3500) shared_pool_size was changed from 3.5MB to 9MB (std values are

3.5, 5 & 9MB) open_cursors was changed from 200 to 300 (std values are 200

& 300) db_block_size was changed from 2048 (2K) to 4096 (4K) {at the time

of database creation}.

The initial SGA was around 4MB when the server RAM was 32MB and The new SGA was

around 13MB when the server RAM was increased to 128MB.

85.If I have an execute privilege on a procedure in another users

schema, can I execute his procedure even though I do not have privileges on the

tables within the procedure ?

Yes

86.What are various types of joins?

Equijoins, Non-equijoins, self join, outer join

87.What is a package cursor?

A package cursor is a cursor which you declare in the package specification

without an SQL statement. The SQL statement for the cursor is attached dynamically

at runtime from calling procedures.

88.If you insert a row in a table, then create another table and then say

Rollback. In this case will the row be inserted?

Yes. Because Create table is a DDL which commits automatically as soon as it is

executed. The DDL commits the transaction even if the create statement fails

internally (eg table already exists error) and not syntactically.

89.What are the various types of queries?

Normal Queries

Sub Queries

Co-related queries

Nested queries

Compound queries

90.What is a transaction?

A transaction is a set of SQL statements between any two COMMIT and ROLLBACK

statements.

91.What is implicit cursor and how is it used by Oracle ?

An implicit cursor is a cursor which is internally created by Oracle. It is

created by Oracle for each individual SQL.

92.Which of the following is not a schema object : Indexes,

tables, public synonyms, triggers and packages ?

Public synonyms

93.What is the difference between a view and a snapshot ?

94.What is PL/SQL?

PL/SQL is Oracle's Procedural Language extension to SQL. The language includes

object oriented programming techniques such as encapsulation, function

overloading, information hiding (all but inheritance), and so, brings

state-of-the-art programming to the Oracle database server and a variety of

Oracle tools.

95.Is there a PL/SQL Engine in SQL*Plus?

No. Unlike Oracle Forms, SQL*Plus does not have a PL/SQL engine. Thus, all your

PL/SQL are send directly to the database engine for execution. This makes it

much more efficient as SQL statements are not stripped off and send to the

database individually.

96.Is there a limit on the size of a PL/SQL block?

Currently, the maximum parsed/compiled size of a PL/SQL block is 64K and the

maximum code size is 100K. You can run the following select statement to query

the size of an existing package or procedure.

SQL> select * from dba_object_size where name = 'procedure_name'

97.Can one read/write files from PL/SQL?

Included in Oracle 7.3 is a UTL_FILE package that can read and write files. The

directory you intend writing to has to be in your INIT.ORA file (see

UTL_FILE_DIR=... parameter). Before Oracle 7.3 the only means of writing a file

was to use DBMS_OUTPUT with the SQL*Plus SPOOL command.

DECLARE

fileHandler UTL_FILE.FILE_TYPE;

BEGIN

fileHandler := UTL_FILE.FOPEN('/home/oracle/tmp', 'myoutput','W');

UTL_FILE.PUTF(fileHandler, 'Value of func1 is %s\n', func1(1));

UTL_FILE.FCLOSE(fileHandler);

END;

98.How can I protect my PL/SQL source code?

PL/SQL V2.2, available with Oracle7.2, implements a binary wrapper for PL/SQL

programs to protect the source code. This is done via a standalone utility that

transforms the PL/SQL source code into portable binary object code (somewhat

larger than the original). This way you can distribute software without having

to worry about exposing your proprietary algorithms and methods. SQL*Plus and

SQL*DBA will still understand and know how to execute such scripts. Just be

careful, there is no "decode" command available.

The syntax is:

wrap iname=myscript.sql oname=xxxx.yyy

99.Can one use dynamic SQL within PL/SQL? OR Can you use a DDL in

a procedure ? How ?

From PL/SQL V2.1 one can use the DBMS_SQL package to execute dynamic SQL

statements.

Eg: CREATE OR REPLACE PROCEDURE DYNSQL

AS

cur integer;

rc integer;

BEGIN

cur := DBMS_SQL.OPEN_CURSOR;

DBMS_SQL.PARSE(cur,'CREATE TABLE X (Y DATE)', DBMS_SQL.NATIVE);

rc := DBMS_SQL.EXECUTE(cur);

DBMS_SQL.CLOSE_CURSOR(cur);

END;

1. What is Referential Integrity rule?

Differentiate between

2. Delete & Truncate command.

3. Implicit Cursor & Explicit Cursor.

4. Ref. key & Foreign key.

5. Where & Having Clause.

6. What are various kinds of Integrity Constraints in Oracle?

7. What are various kind of joins?

8. What is Raise_Application_Error?

9. What are various kinds of exceptions in Oracle?

10. Normal Forms

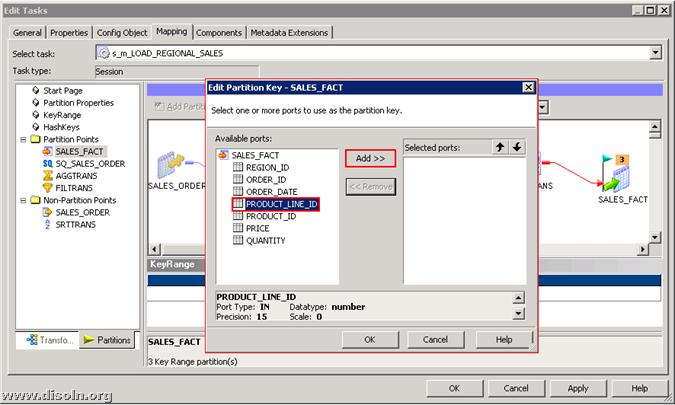

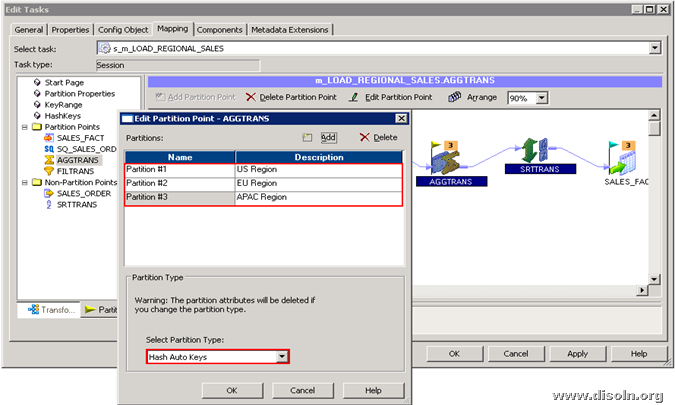

Below images shows the steps involved in setting up the key range partition.

Below images shows the steps involved in setting up the key range partition.