Informatica PowerCenter Session Partitioning can be effectively used for parallel data processing and achieve faster data delivery. Parallel data processing performance is heavily depending on the additional hardware power available. In additional to that, it is important to choose the appropriate partitioning algorithm or partition type. In this article lets discuss the optimal session partition settings.

Business Use Case

Partition Tutorial Series

Part I : Partition Introduction.

Part II : Partition Implementation.

Part III : Dynamic Partition.

Part I : Partition Introduction.

Part II : Partition Implementation.

Part III : Dynamic Partition.

Lets consider a business use case to explain the implementation of appropriate partition algorithms and configuration.

Daily sales data generated from three sales region need to be loaded into an Oracle data warehouse. The sales volume from three different regions varies a lot, hence the number of records processed for every region varies a lot. The warehouse target table is partitioned based on product line.

Below is the simple structure of the mapping to get the assumed functionality.

Below is the simple structure of the mapping to get the assumed functionality.

Pass-through Partition

A pass-through partition at the source qualifier transformation is used to split the source data into three different parallel processing data sets. Below image shows how to setup pass through partition for three different sales regions.

Once the partition is setup at the source qualifier, you get additional Source Filter option to restrict the data which corresponds to each partition. Be sure to provide the filter condition such that same data is not processed through more than one partition and data is not duplicated. Below image shows three additional Source Filters, one per each partition.

Round Robin Partition

Since the data volume from three sales region is not same, use round robin partition algorithm at the next transformation in pipeline. So that the data is equally distributed among the three partitions and the processing load is equally distributed. Round robin partition can be setup as shown in below image.

Hash Auto Key Partition

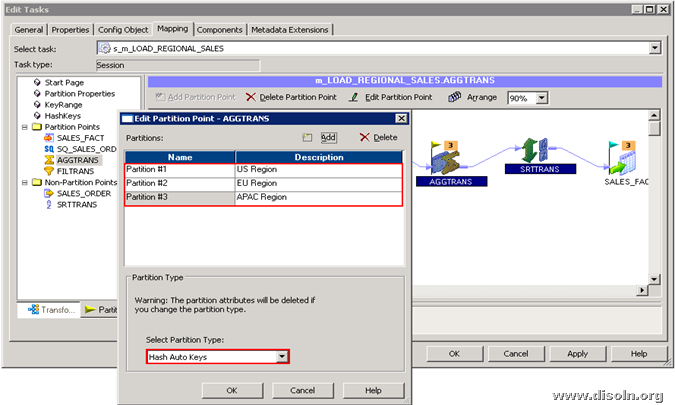

At the Aggregator transformation, data need to redistribute across the partitions to avoid the potential splitting of aggregator groups. Hash auto key partition algorithm will make sure the data from different partition is redistributed such that records with the same key is in the same partition. This algorithm will identify the keys based on the group key provided in the transformation.

Processing records of the same aggregator group in different partition will result in wrong result.

Processing records of the same aggregator group in different partition will result in wrong result.

Key Range Partition

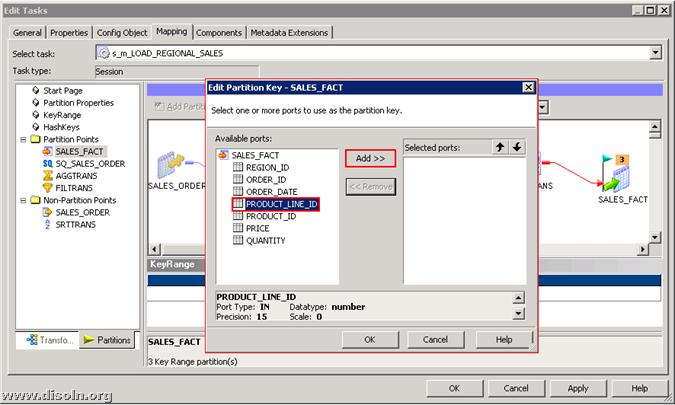

Use Key range partition when required to distribute the records among partitions based on the range of values of a port or multiple ports.

Here the target table is range partitioned on product line. Create a range partition on target definition on PRODUCT_LINE_ID port to get the best write throughput.

Below images shows the steps involved in setting up the key range partition.

Below images shows the steps involved in setting up the key range partition.Click on Edit Keys to define the ports on which the key range partition is defined.

A pop up window shows the list of ports in the transformation, Choose the ports on which the key range partition is required.

Now give the value start and end range for each partition as shown below.

We did not have to use Hash User Key Partition and Database Partition algorithm in the use case discussed here.

Hash User Key partition algorithm will let you choose the ports to group rows among partitions. This algorithm can be used in most of the places where hash auto key algorithm is appropriate.

Database partition algorithm queries the database system for table partition information. It reads partitioned data from the corresponding nodes in the database. This algorithm can be applied either on the source or target definition.

Reference: link

Reference: link

No comments:

Post a Comment